Bringing Deep Learning Workloads to JSC supercomputers

Data loading

Alexandre Strube // Sabrina Benassou

September 18, 2024

Schedule for day 2

| Time | Title |
|---|---|
| 10:00 - 10:15 | Welcome, questions |
| 10:15 - 11:30 | Data loading |
| 11:30 - 12:00 | Coffee Break (flexible) |
| 12:30 - 14:00 | Parallelize Training |

Let’s talk about DATA

- Some general considerations one should have in mind

I/O is separate and shared

All compute nodes of all supercomputers see the same files

- Performance tradeoff between shared accessibility and speed

- It’s simple to load data fast to 1 or 2 GPUs. But to 100? 1000? 10000?

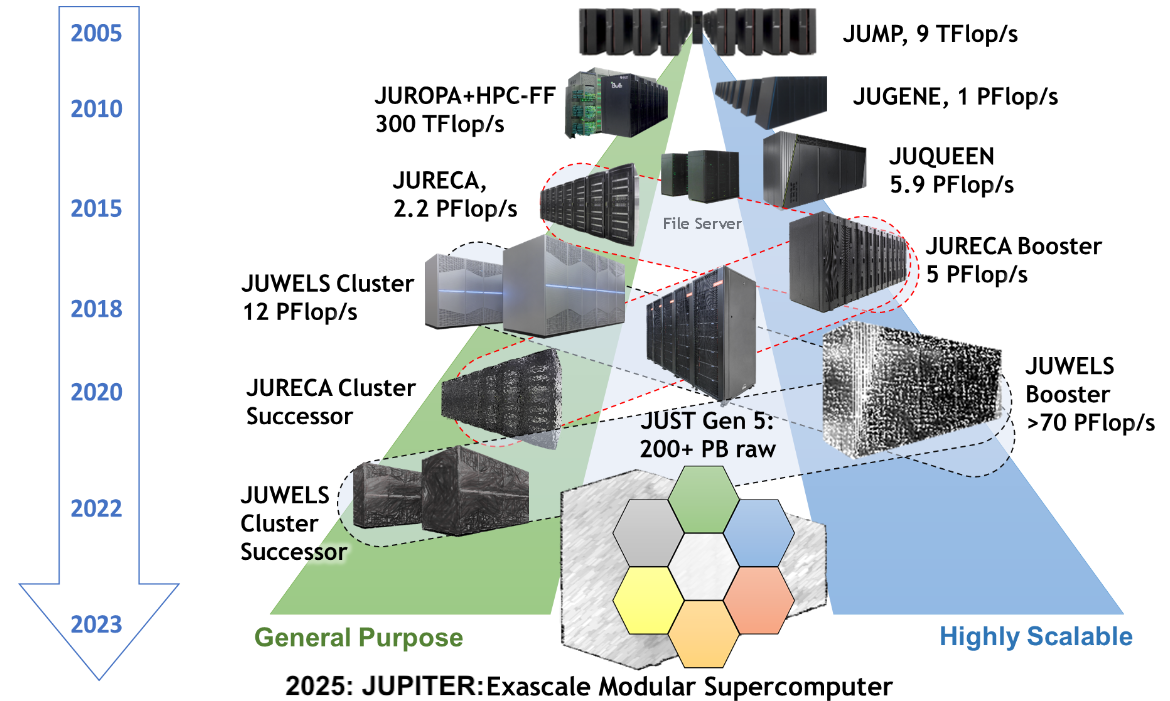

Jülich Supercomputers

- Our I/O server is almost a supercomputer by itself

JSC Supercomputer Strategy

Where do I keep my files?

- `$PROJECT_projectname` for code (`projectname` is `training2434` in this case)

  - Most of your work should stay here

- `$DATA_projectname` for big data(*)

  - Permanent location for big datasets

- `$SCRATCH_projectname` for temporary files (fast, but not permanent)

  - Files are deleted after 90 days untouched
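As a small illustration, the three locations can be looked up from inside a Python script. This is only a sketch: it assumes the variables are exported in your shell under exactly the names shown above, and the helper `storage_paths` is a hypothetical name, not part of any JSC tool.

```python
import os


def storage_paths(projectname, env=None):
    """Look up the per-project storage variables named on the slide."""
    env = os.environ if env is None else env
    paths = {}
    for kind in ("PROJECT", "DATA", "SCRATCH"):
        # Builds names such as PROJECT_training2434; None if the variable is unset
        paths[kind] = env.get(f"{kind}_{projectname}")
    return paths


if __name__ == "__main__":
    for kind, path in storage_paths("training2434").items():
        print(kind, "->", path if path else "(not set on this machine)")
```

Passing a dictionary as `env` makes the function easy to try on a laptop where the variables do not exist.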

Data services

- JSC provides different data services

- Data projects give massive amounts of storage

- We use it for ML datasets. Join the project at JuDoor.

- After being approved, connect to the supercomputer and try it:

Data Staging

- The LARGEDATA filesystem is not accessible by compute nodes

- Copy files to an accessible filesystem BEFORE working

- Copying ImageNet-21K alone to `$SCRATCH` takes 21+ minutes

- We already copied it to $SCRATCH for you
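Staging can be scripted so that the copy happens once, before the training job starts. The following is a minimal sketch using only the standard library; `stage_dataset` is a hypothetical helper, and the source and destination paths are whatever you pass in (for example a dataset directory and a folder under your scratch location).

```python
import shutil
import time
from pathlib import Path


def stage_dataset(src, dst):
    """Copy a dataset directory tree to dst; return (file count, seconds)."""
    src, dst = Path(src), Path(dst)
    start = time.perf_counter()
    # dirs_exist_ok allows the target directory to exist already (Python 3.8+)
    shutil.copytree(src, dst, dirs_exist_ok=True)
    elapsed = time.perf_counter() - start
    n_files = sum(1 for p in dst.rglob("*") if p.is_file())
    return n_files, elapsed
```

Timing the copy, as done here, gives a rough idea of how much staging costs for your own dataset. For datasets with very many small files, a plain recursive copy like this is likely to be slow, which is one motivation for the packed file formats discussed later.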

Data loading

Strategies

- We have CPUs and lots of memory - let’s use them

- Overlap training with data loading for the next batch

- `/dev/shm` is a filesystem in RAM - ultra fast ⚡️

- Use big files made for parallel computing

- HDF5, Zarr, mmap() in a parallel fs, LMDB

- Use specialized data loading libraries

- FFCV, DALI, Apache Arrow

- Compression such as squashfs

- Data transfer can be slower than decompression (must be checked case by case)

- Beneficial in cases where numerous small files are at hand.
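The idea of loading the next batch while the current one is being processed can be sketched with a background thread and a bounded queue. This is a standard-library illustration of the principle only; `prefetch` is a hypothetical helper, and in practice PyTorch's `DataLoader` with `num_workers > 0` does a similar job using worker processes.

```python
import queue
import threading


def prefetch(batches, depth=2):
    """Yield items from `batches` while a background thread loads ahead."""
    q = queue.Queue(maxsize=depth)  # at most `depth` batches wait in memory
    sentinel = object()             # marks the end of the stream

    def worker():
        try:
            for batch in batches:
                q.put(batch)        # blocks while the queue is full
        finally:
            q.put(sentinel)         # always signal the end, even on error

    threading.Thread(target=worker, daemon=True).start()
    while True:
        item = q.get()
        if item is sentinel:
            return
        yield item


if __name__ == "__main__":
    for batch in prefetch(iter(range(5))):
        print("training on batch", batch)
```

Note that in this sketch an exception in the loading thread simply ends the stream early; a production loader would report it to the consumer.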

Libraries

- Apache Arrow https://arrow.apache.org/

- FFCV https://github.com/libffcv/ffcv and FFCV for PyTorch-Lightning

- Nvidia’s DALI https://developer.nvidia.com/dali

We need to download some code

The ImageNet dataset

Large Scale Visual Recognition Challenge (ILSVRC)

- An image dataset organized according to the WordNet hierarchy.

- Extensively used in algorithms for object detection and image classification at large scale.

- It has 1000 classes, with 1.2 million images for training and 50,000 images for validation.

The ImageNet dataset

    ILSVRC
    |-- Data/
        `-- CLS-LOC
            |-- test
            |-- train
            |   |-- n01440764
            |   |   |-- n01440764_10026.JPEG
            |   |   |-- n01440764_10027.JPEG
            |   |   |-- n01440764_10029.JPEG
            |   |-- n01695060
            |   |   |-- n01695060_10009.JPEG
            |   |   |-- n01695060_10022.JPEG
            |   |   |-- n01695060_10028.JPEG
            |   |   |-- ...
            |   |...
            |-- val
                |-- ILSVRC2012_val_00000001.JPEG
                |-- ILSVRC2012_val_00016668.JPEG
                |-- ILSVRC2012_val_00033335.JPEG
                |-- ...

The ImageNet dataset

imagenet_train.pkl

    {
        'ILSVRC/Data/CLS-LOC/train/n03146219/n03146219_8050.JPEG': 524,
        'ILSVRC/Data/CLS-LOC/train/n03146219/n03146219_12728.JPEG': 524,
        'ILSVRC/Data/CLS-LOC/train/n03146219/n03146219_9736.JPEG': 524,
        ...
        'ILSVRC/Data/CLS-LOC/train/n03146219/n03146219_7460.JPEG': 524,
        ...
    }

imagenet_val.pkl
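A path-to-label index like `imagenet_train.pkl` could be produced along the following lines. This is a sketch under assumptions: labels are assigned by sorting the class directory names, which may not match the numbering used in the course files, and `build_index` and `save_index` are hypothetical helper names.

```python
import pickle
from pathlib import Path


def build_index(root, train_subdir):
    """Map each image path (relative to root) to an integer class label."""
    root = Path(root)
    class_dirs = sorted(d for d in (root / train_subdir).iterdir() if d.is_dir())
    index = {}
    # Assumption: the label is the position of the class folder in sorted order
    for label, class_dir in enumerate(class_dirs):
        for img in sorted(class_dir.glob("*.JPEG")):
            index[img.relative_to(root).as_posix()] = label
    return index


def save_index(index, out_path):
    """Pickle the index so it can be loaded without walking the file tree."""
    with open(out_path, "wb") as f:
        pickle.dump(index, f)
```

Loading one pickle at startup avoids listing over a million files on the shared filesystem each time a job begins.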

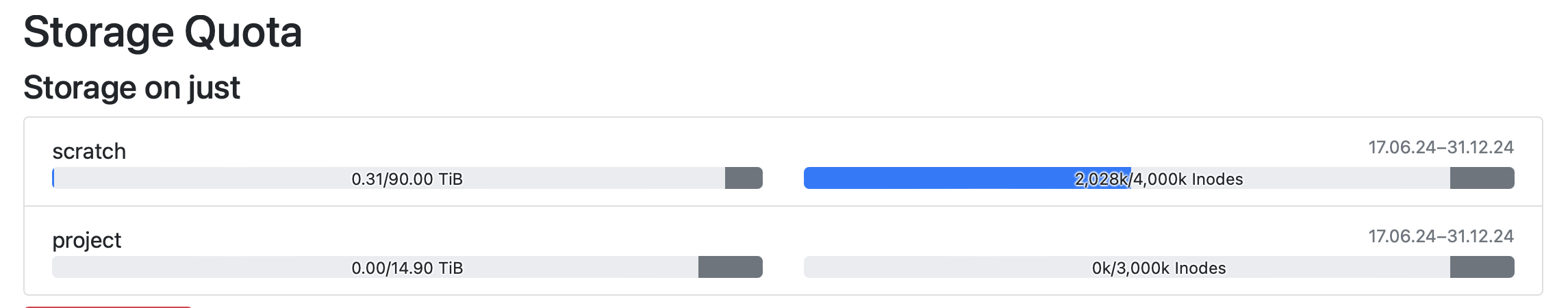

Access File System

Inodes

- Inodes (Index Nodes) are data structures that store metadata about files and directories.

- Unique identification of files and directories within the file system.

- Efficient management and retrieval of file metadata.

- Essential for file operations like opening, reading, and writing.

- Limitations:

  - Fixed Number: Limited number of inodes; no new files if exhausted, even with free disk space.

  - Space Consumption: Inodes consume disk space; balancing is needed for efficiency.
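To get a feeling for inode pressure, one can query the filesystem and count how many entries a dataset directory contains. This is a minimal sketch for Unix-like systems using `os.statvfs`; the helper names are hypothetical, and the numbers a parallel filesystem reports through this call may not reflect per-project quotas.

```python
import os


def inode_usage(path):
    """Return total, free, and used inode counts of the filesystem holding path."""
    st = os.statvfs(path)  # Unix only
    total, free = st.f_files, st.f_ffree
    return {"total": total, "free": free, "used": total - free}


def count_entries(root):
    """Count files and directories below root; each one occupies an inode."""
    n = 0
    for _, dirnames, filenames in os.walk(root):
        n += len(dirnames) + len(filenames)
    return n


if __name__ == "__main__":
    print(inode_usage("."))
    print("entries below current directory:", count_entries("."))
```

A dataset stored as millions of individual JPEG files uses millions of inodes, whereas the same data packed into a single Arrow or HDF5 file uses one.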


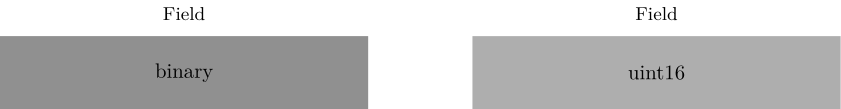

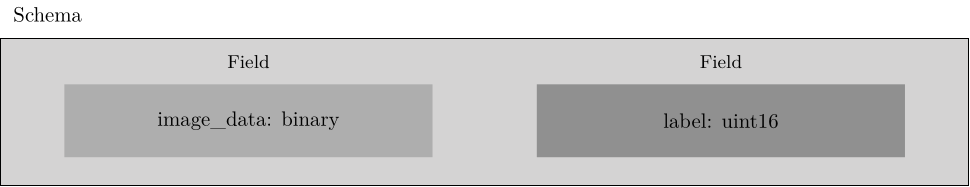

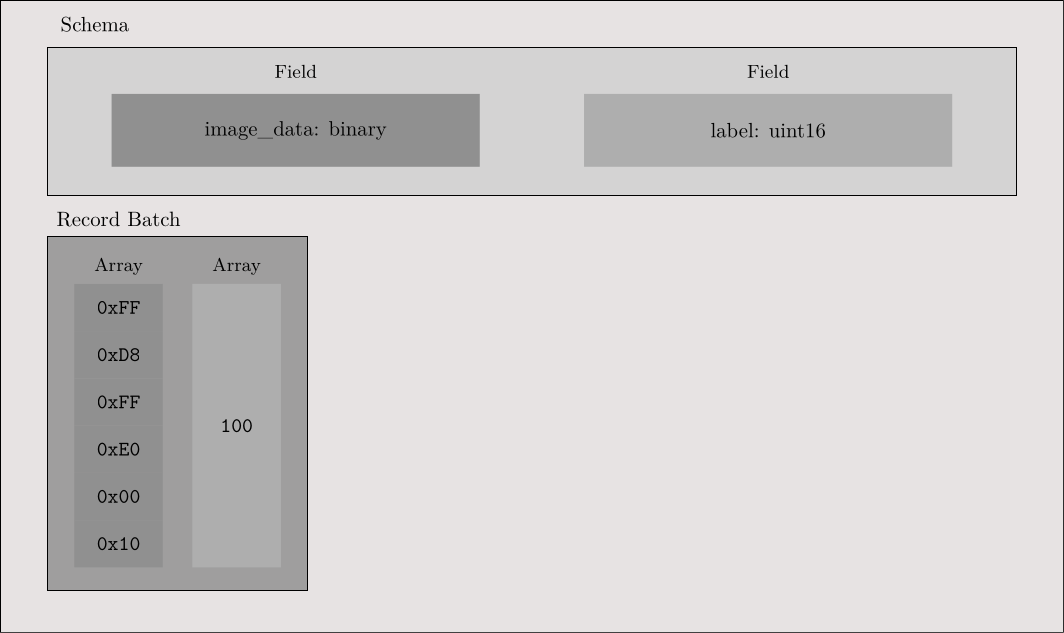

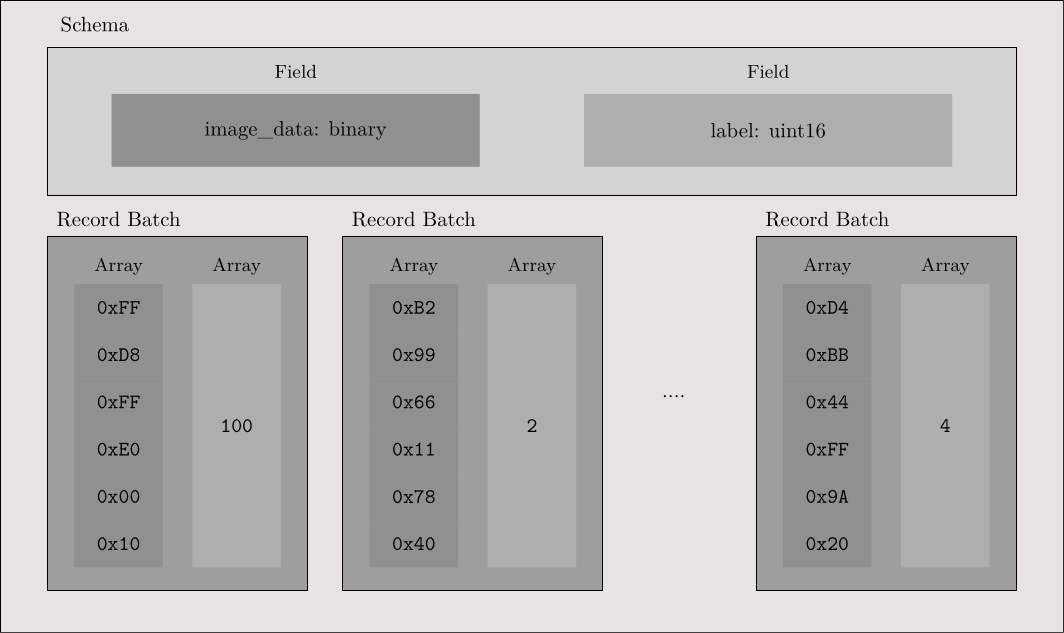

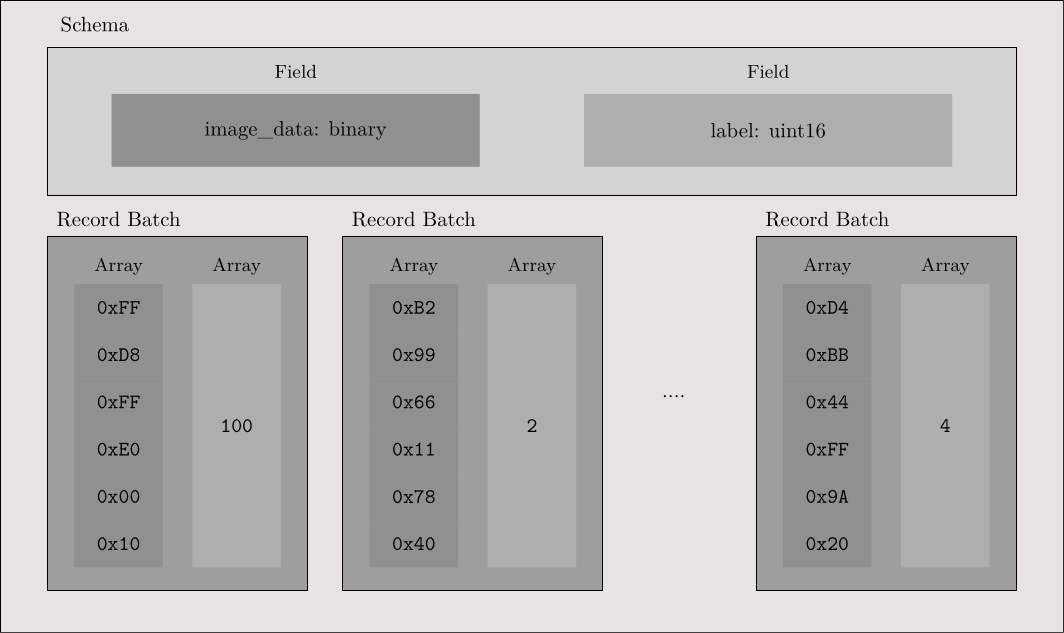

Pyarrow File Creation

Access Arrow File

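    import io

    import pyarrow as pa
    from PIL import Image

    # Method of the dataset class
    def __getitem__(self, idx):
        # Open the file on first access, so a DataLoader worker opens its own handle
        if self.arrowfile is None:
            self.arrowfile = pa.OSFile(self.data_root, 'rb')
            self.reader = pa.ipc.open_file(self.arrowfile)
        # Fetch the record batch at position idx
        row = self.reader.get_batch(idx)
        img_string = row['image_data'][0].as_py()
        target = row['label'][0].as_py()
        # Decode the stored bytes into an RGB image
        with io.BytesIO(img_string) as byte_stream:
            with Image.open(byte_stream) as img:
                img = img.convert("RGB")
        if self.transform:
            img = self.transform(img)
        return img, target

HDF5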

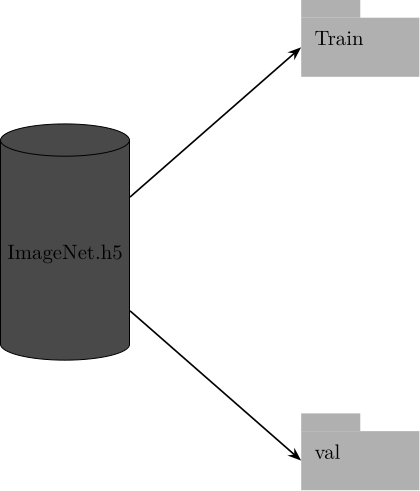

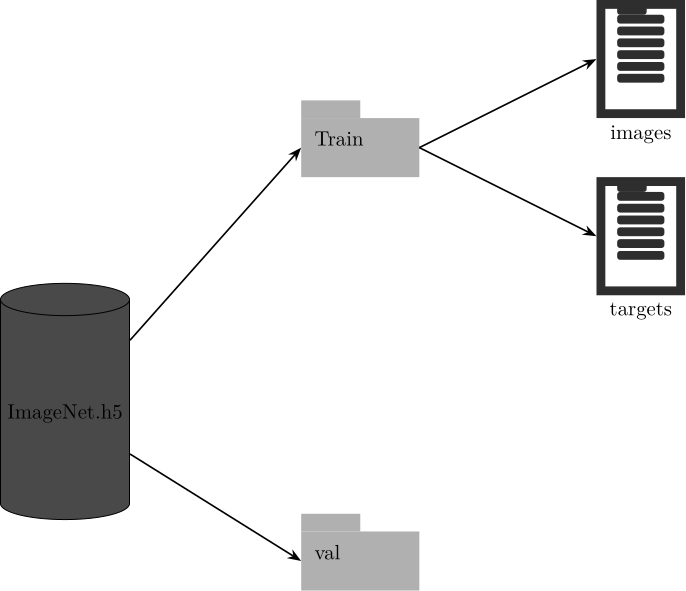

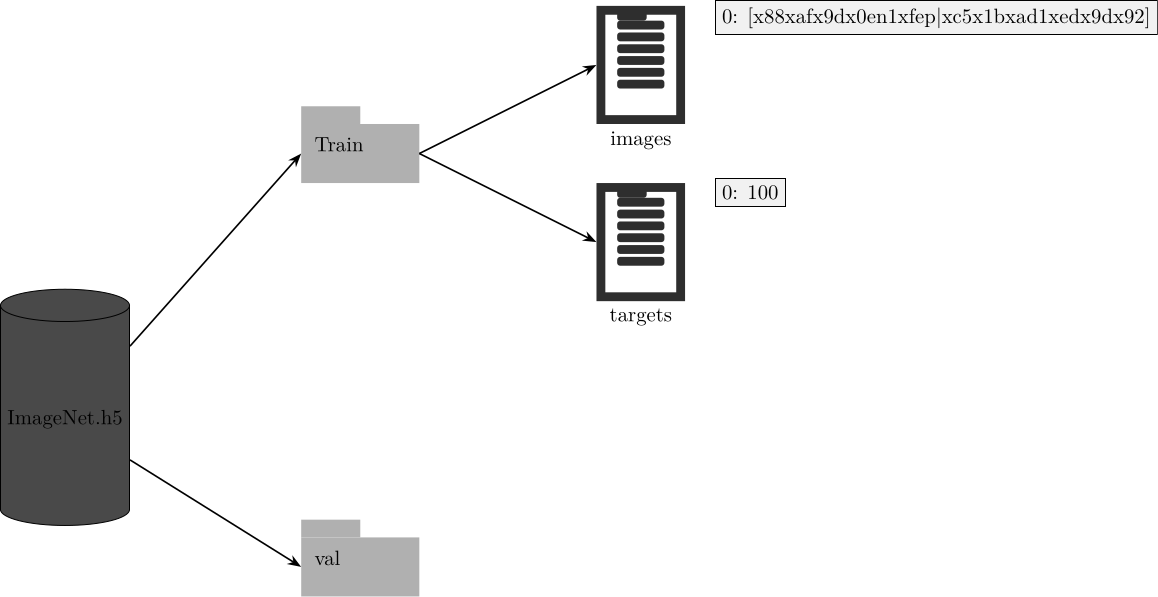

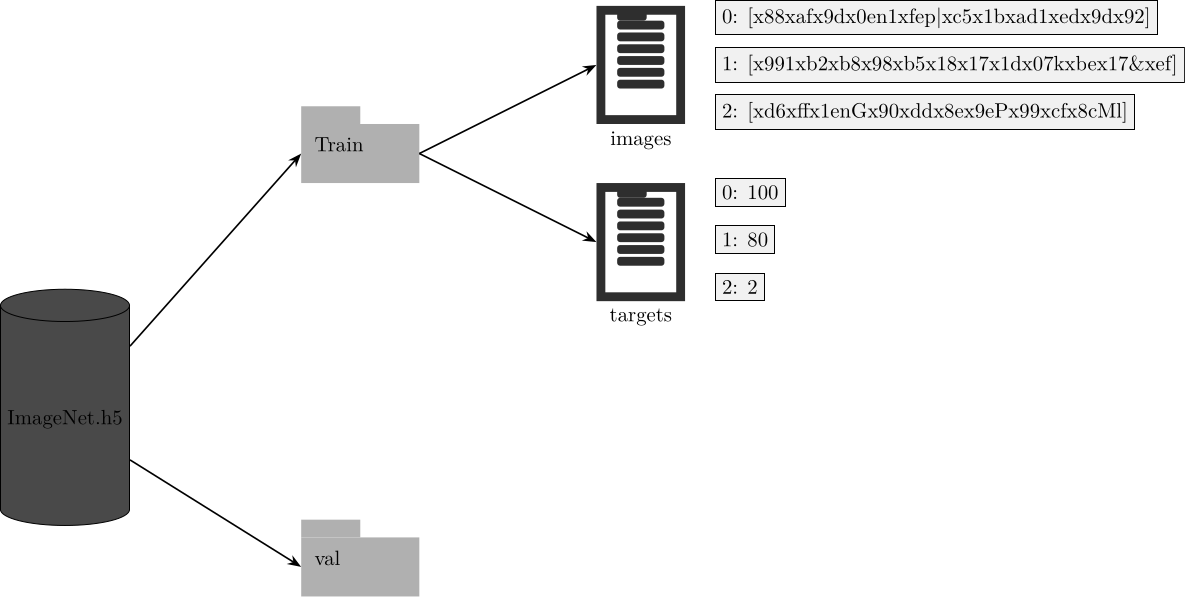

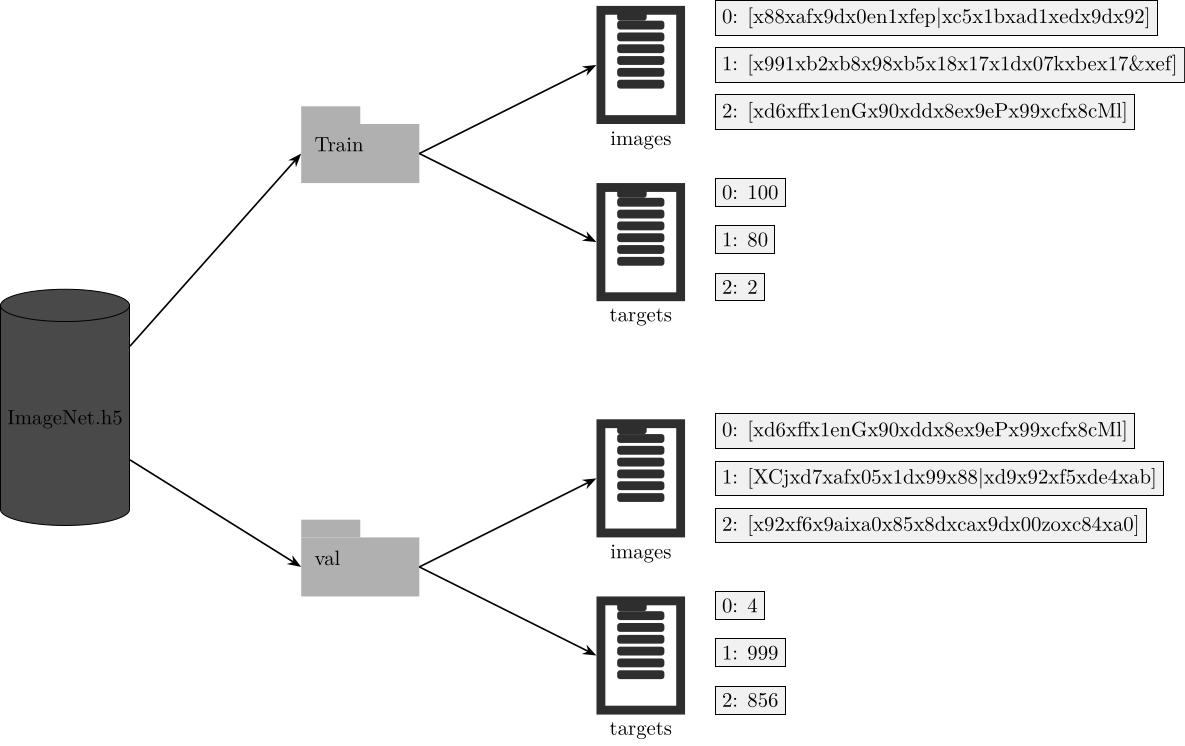

HDF5
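A file with the layout expected by the reading code (a group per split containing `images` and `targets`) could be written as sketched below. This is an assumption about the layout, not necessarily how the course file was produced: encoded image bytes are stored as variable-length `uint8` arrays, and `write_h5` and `read_sample` are hypothetical helper names.

```python
import h5py
import numpy as np


def write_h5(samples, out_path, split="train"):
    """Store encoded image bytes and labels under <split>/images and <split>/targets."""
    samples = list(samples)
    with h5py.File(out_path, "w") as f:
        group = f.create_group(split)
        # Variable-length uint8 arrays hold images of different byte sizes
        images = group.create_dataset(
            "images", shape=(len(samples),), dtype=h5py.vlen_dtype(np.dtype("uint8"))
        )
        targets = group.create_dataset("targets", shape=(len(samples),), dtype="int64")
        for i, (data, label) in enumerate(samples):
            images[i] = np.frombuffer(data, dtype=np.uint8)
            targets[i] = label


def read_sample(path, idx, split="train"):
    """Read back the idx-th sample as (bytes, label)."""
    with h5py.File(path, "r") as f:
        group = f[split]
        return group["images"][idx].tobytes(), int(group["targets"][idx])
```

Storing the compressed JPEG bytes rather than decoded pixel arrays keeps the file close to the size of the original dataset; decoding then happens in the data loading workers.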

Access h5 File

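    import io

    import h5py
    from PIL import Image

    # Method of the dataset class
    def __getitem__(self, idx):
        # Open the file on first access, so a DataLoader worker opens its own handle
        if self.h5file is None:
            self.h5file = h5py.File(self.train_data_path, 'r')[self.split]
            self.imgs = self.h5file["images"]
            self.targets = self.h5file["targets"]
        # Fetch the encoded image bytes and the label at position idx
        img_string = self.imgs[idx]
        target = self.targets[idx]
        # Decode the stored bytes into an RGB image
        with io.BytesIO(img_string) as byte_stream:
            with Image.open(byte_stream) as img:
                img = img.convert("RGB")
        if self.transform:
            img = self.transform(img)
        return img, target

DEMO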

Exercise

- Could you create an Arrow file for the Flickr dataset stored in `/p/scratch/training2434/data/Flickr30K/` and read it using a dataloader?